The Apollo Chorus performed last night at the Big Foot Arts Festival in Walworth, Wis., so I haven't done a lot of useful things today. I did take a peek at the other weather archive I have lying around, and discovered (a) it has the same schema as the one I'm currently importing into Weather Now 5, and (b) it only goes back to August 2006.

Somewhere I have older archives that I need to find... But if not, NOAA might have some.

As of 17:16 CDT, the massive Weather Now v3 to v5 import had 115,441,906 records left to transfer. At 14:28 CDT yesterday, it was at 157,409,431, giving us a rate of ( 41,967,525 / 96,480 seconds = ) 435 records per second. A little more math gives us another 265,392 seconds to go, or 3 days, 1 hour, 43 minutes left.
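
For anyone who wants to check my math, here's a minimal Python sketch of the same estimate. The function name `transfer_eta` is made up for illustration. It takes the two snapshot counts and the 26 hours and 48 minutes between them, and it assumes the rate stays constant, which it won't.

```python
from datetime import timedelta

def transfer_eta(earlier_remaining: int, later_remaining: int, elapsed: timedelta):
    """Return (records per second, estimated time left) from two progress snapshots."""
    transferred = earlier_remaining - later_remaining
    rate = transferred / elapsed.total_seconds()
    # Assumes the transfer keeps going at the same average rate.
    seconds_left = later_remaining / rate
    return rate, timedelta(seconds=round(seconds_left))

# 14:28 one day to 17:16 the next is 26 h 48 min (96,480 seconds).
rate, eta = transfer_eta(157_409_431, 115_441_906, timedelta(hours=26, minutes=48))
print(f"{rate:.0f} records/s, {eta} left")  # 435 records/s, 3 days, 1:43:12 left
```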

So, OK then, what's the over-under on this thing finishing before 7pm Monday?

It's just finished station KCKV (Outlaw Field, Clarksville, Tenn.), with another 2,770 stations left to go. Because it's going in alphabetical order, it has finished all of the Pacific Islands (A stations), Northern Europe (B and E), Canada (C), and Africa (D, F, G, and H). There are no I or J stations (at least not on Earth). K is by far the largest swath, as it encompasses all of the continental US, which has more airports than any other land mass.

Once it finishes the continental US, it'll have only 38 million left to do! Whee!

It takes a while to transfer 162.4 million rows of data from a local SQL database to a remote Azure Tables collection. So far, after 4 hours and 20 minutes, I've transferred just over 4 million rows. That works out to about 260 rows per second, or 932,000 per hour. So, yes, the entire transfer will take 174 hours.

Good thing it can run in the background. Also, because it cycles through three distinct phases (disk-intensive data read, processor-intensive data transformation, and network-intensive data write), it doesn't really take a lot of effort for my computer to handle it. In fact, network writes take 75% of the cycle time for each batch of reports, because the Azure Tables API accepts at most 100 rows per batch.
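
The batching constraint is worth a quick illustration. An Azure Tables batch (an "entity group transaction") can hold at most 100 operations, and every entity in it must share the same `PartitionKey`. Here's a minimal sketch of how an importer might slice rows into legal batches. The function name and the row dictionaries are hypothetical, not my actual import code, and the actual upload call is left out.

```python
from itertools import groupby, islice
from typing import Iterable, Iterator, List

# Azure Tables allows at most 100 operations per entity group transaction.
MAX_BATCH = 100

def batches_for_upload(rows: Iterable[dict], max_batch: int = MAX_BATCH) -> Iterator[List[dict]]:
    """Yield lists of at most max_batch rows that all share one PartitionKey.

    Rows don't have to be sorted: groupby splits on every change of key, so
    each batch is still single-partition; sorted input just makes batches fuller.
    """
    for _, group in groupby(rows, key=lambda r: r["PartitionKey"]):
        group = iter(group)
        while True:
            chunk = list(islice(group, max_batch))
            if not chunk:
                break
            yield chunk

# Hypothetical sample: 250 reports from one station, then 1 from another.
rows = [{"PartitionKey": "KCKV", "RowKey": str(i)} for i in range(250)]
rows.append({"PartitionKey": "KORD", "RowKey": "0"})
print([len(b) for b in batches_for_upload(rows)])  # [100, 100, 50, 1]
```

Since the import walks the stations alphabetically, rows for each station arrive together, so almost every batch is a full 100 rows.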

Now, you might wonder why I don't just push the import code up to Azure and speed up the network writes a bit. Well, yes, I thought of that, but decided against the effort and cost. To do that, I would have to upload a SQL backup file to a SQL VM big enough to take a SQL Server instance. Any VM big enough to do that would cost about 67¢ per hour. So even if I cut the total time in half, it would still cost me $60 or so to do the transfer. That's an entire bottle of bourbon's worth just to speed up something for a hobby project by a couple of days.
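
Here's the back-of-the-envelope version as a tiny Python sketch. The function name is invented, and the 2× speedup is the optimistic "cut the total time in half" assumption above, not a measured number.

```python
def cloud_transfer_cost(total_rows: int, rows_per_hour: int, speedup: float, dollars_per_hour: float):
    """Return (hours, dollars) for running the transfer on an hourly-billed VM."""
    hours = total_rows / rows_per_hour / speedup
    return hours, hours * dollars_per_hour

# 162.4 million rows at the local rate of ~932,000 rows/hour, twice as fast in Azure.
hours, cost = cloud_transfer_cost(162_400_000, 932_000, speedup=2.0, dollars_per_hour=0.67)
print(f"{hours:.0f} hours, about ${cost:.0f}")  # 87 hours, about $58
```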

Speaking of cost, how much will all this data add to my Azure bill? Well, I estimate the entire archive of 2009-2022 data will come to about 50 gigabytes. The 2003-2009 data will probably add another 30. Azure Tables cost 6¢ per gigabyte per month for the geographically-redundant storage I use. I will leave the rest of the calculation as an exercise for the reader.
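
For readers who'd rather skip the exercise, a minimal sketch in integer cents (to dodge floating-point rounding). It uses only my size estimates and the storage rate above, and it ignores per-transaction charges, which Azure also bills for.

```python
def monthly_storage_cost_cents(gigabytes: int, cents_per_gb_month: int) -> int:
    """Storage-only monthly cost in cents; transaction charges are not included."""
    return gigabytes * cents_per_gb_month

archive_gb = 50 + 30  # 2009-2022 estimate plus 2003-2009 estimate
cents = monthly_storage_cost_cents(archive_gb, 6)
print(f"${cents / 100:.2f} per month")  # $4.80 per month
```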

Update: I just made a minor change to the import code, and got a bit of a performance bump. We're now up to 381 rows per second, 46% faster than before, which means the upload should finish in only 114 hours or 4.7 days. All right, let's see if we're done early Monday morning after all! (It's already almost done with Canada, too!)

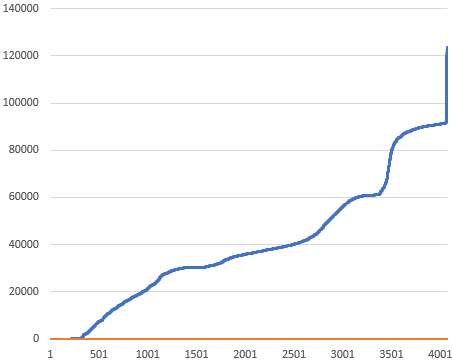

The data transfer from Weather Now v3 to v5 continues in the background. Before running it, I did a simple SQL query to find out how many readings each station reported between September 2009 and March 2013. The results surprised me a bit:

The v3 database recorded 162.4 million readings from 4,071 stations. Fully 75 of them have only one report, and digging in, I can see that a lot of those don't have any data. Another 185 have fewer than 100, and a total of 573 have fewer than 10,000.
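
I didn't post the query itself, so what follows is a stand-in rather than the real thing: a minimal sketch of one way to bucket stations by report count, run against a throwaway in-memory SQLite table. The `readings` table, its columns, and the station codes in the sample data are all hypothetical. The thresholds are cumulative, so `under_100` also counts the single-report stations.

```python
import sqlite3

def station_report_buckets(conn: sqlite3.Connection):
    """Count stations by how many reports each has (cumulative thresholds)."""
    query = """
        SELECT
            SUM(CASE WHEN n = 1 THEN 1 ELSE 0 END)     AS one_report,
            SUM(CASE WHEN n < 100 THEN 1 ELSE 0 END)   AS under_100,
            SUM(CASE WHEN n < 10000 THEN 1 ELSE 0 END) AS under_10000,
            COUNT(*)                                   AS stations
        FROM (SELECT station, COUNT(*) AS n FROM readings GROUP BY station)
    """
    return conn.execute(query).fetchone()

# Hypothetical schema and fake stations: one row per weather report.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE readings (station TEXT, observed_at TEXT)")
sample = [("STN1", "t0")]
sample += [("STN2", f"t{i}") for i in range(50)]
sample += [("STN3", f"t{i}") for i in range(200)]
conn.executemany("INSERT INTO readings VALUES (?, ?)", sample)
print(station_report_buckets(conn))  # (1, 2, 3, 3)
```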

At the other end, Anderson AFB on Guam has 123,394 reports, Spangdahlem AB in Germany has 123,297, and Leeuwarden, Netherlands, has 119,533. In fact, seven Dutch weather stations account for 761,000 reports of the 162 million in the archive. I don't know why, but I'll find out at some point. (It looks like some of them have multiple weather recording devices with color designations. I'll do some more digging.)

How many should they have? Well, the archive contains 1,285 days of records. That's about 31,000 hourly reports or 93,000 updates at 20-minute intervals, which is exactly where the chart plateaus. Chicago O'Hare, which reports hourly plus whenever the weather shifts significantly, had 37,069 reports. Half Moon Bay, Calif., which just ticks along on autopilot without a human weather observer to trigger special reports, had 90,958. So the numbers check out pretty well. (The most prolific US-based station, whose 91,083 reports made it the 10th most prolific in the world, was Union County Airport in Marysville, Ohio.)
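
The expected ceilings are simple multiplication. Here's a small Python sketch (names invented for illustration) that reproduces them and compares the two stations above against them.

```python
DAYS_IN_ARCHIVE = 1_285

def expected_reports(days: int, reports_per_hour: int) -> int:
    """Ceiling for a station that reports on a fixed schedule with no gaps."""
    return days * 24 * reports_per_hour

hourly = expected_reports(DAYS_IN_ARCHIVE, 1)        # routine hourly reports
every_20_min = expected_reports(DAYS_IN_ARCHIVE, 3)  # automated 20-minute updates
print(hourly, every_20_min)  # 30840 92520

# O'Hare exceeds the hourly ceiling because of its special reports;
# Half Moon Bay lands just under the 20-minute ceiling.
print(f"{37_069 / hourly:.2f} {90_958 / every_20_min:.2f}")  # 1.20 0.98
```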

Finally, I know that the App has a lot of sloppy data right now. After I transfer over these archives, I'll work on importing the FAA Airports database, which will fix the names and locations of most of the US stations.

Sunday night I finished moving all the Weather Now v4 data to v5. The v4 archives went back to March 2013, but the UI made that difficult to discover. I've also started moving v3 data, which will bring the archives back to September 2009. Once that's done, I think moving the v2 data (back to early 2003) will be as simple as connecting the 2009 import to the 2003 database. Then, someday, I'll import data from other sources, like NCEI (formerly NCDC) and the Met*, to really flesh out the archives.

One of the coolest parts of this is that you can get to every single archival report through a simple URL. For example, to see the weather in Chicago five years ago, simply go to https://wx-now.com/History/KORD/2017/03/30. From there, you can drill into each individual report (like the one from 6pm) or use the navigation buttons at the bottom to browse the data.
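
Because the pattern is so regular, you can build these links yourself. A minimal sketch, inferred from the single example URL above (station code, then year, month, and day with zero padding). The helper name is hypothetical, the uppercasing just matches the example, and I'm not showing the per-report URLs here.

```python
from datetime import date

BASE = "https://wx-now.com/History"

def history_url(station: str, day: date) -> str:
    """Build a daily-history URL of the form /History/{station}/{yyyy}/{MM}/{dd}."""
    return f"{BASE}/{station.upper()}/{day:%Y/%m/%d}"

print(history_url("kord", date(2017, 3, 30)))
# https://wx-now.com/History/KORD/2017/03/30
```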

Meanwhile, work continues apace on importing geographic data. And I have discovered a couple of UI bugs, including a memory leak that has caused the app to crash twice since launch. Oops.

* The Met has really cool archives, some of which go back to the 1850s.

I've just switched the DNS entries for wx-now.com over to the v5 App, and I've turned off the v4 App and worker role. It'll take some time to transfer over the 360 GB of archival data, and to upload the 9 million rows of Gazetteer data, however. I've set up a virtual machine in my Azure subscription specifically to do that.

This has been quite a lift. Check out the About... page for the whole history of the application. And watch this space over the next few months for more information about how the app works, and what development choices I made (and why).

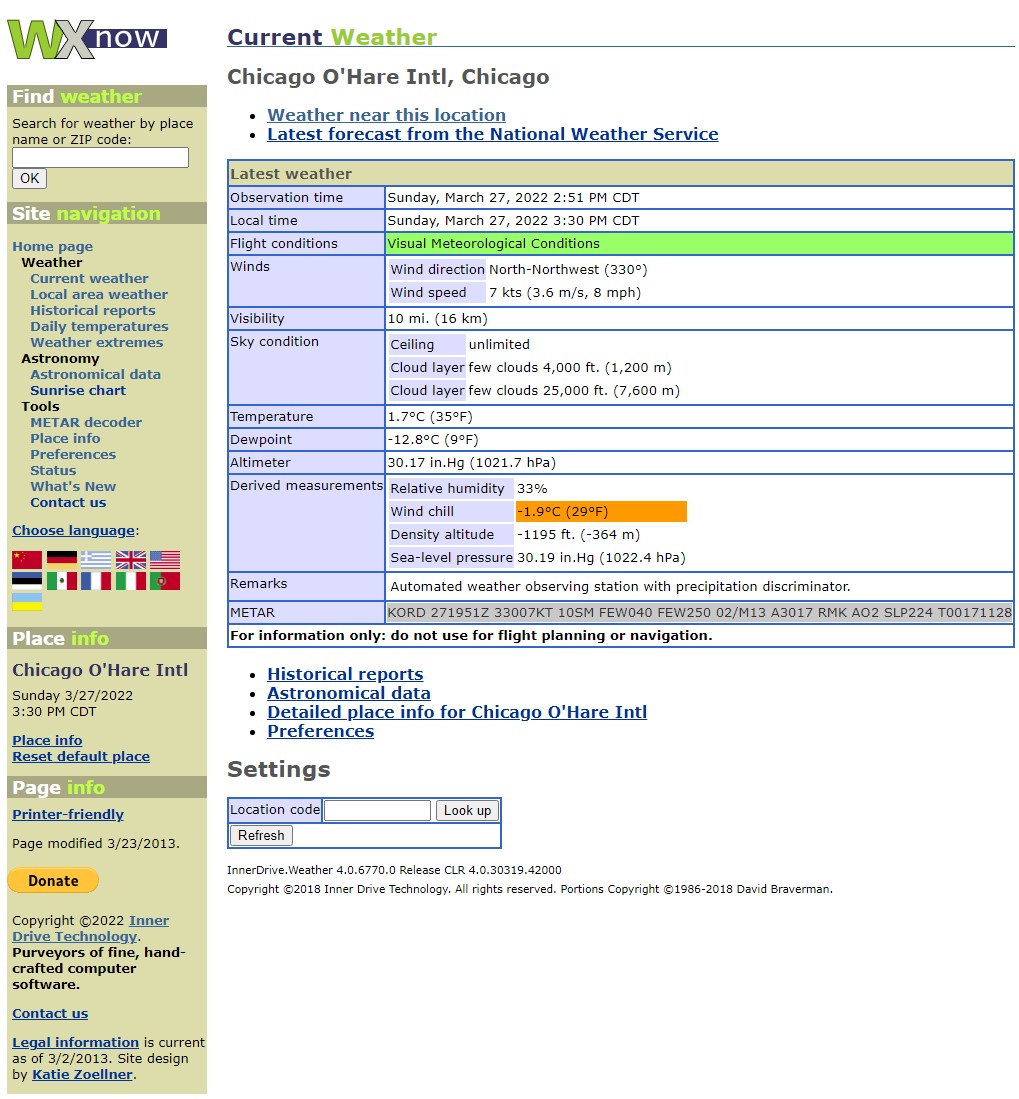

Just for posterity, here's what the v4 Current Weather page looked like:

Good night, v4. You had a good 8-year run. And good night, Katie Zoellner's lovely design, which debuted 15 years ago.

Via Bruce Schneier, a developer who maintains one of the most important NPM packages in the world got pissed off at Russia recently, without perhaps thinking through the long-term consequences:

A developer has been caught adding malicious code to a popular open-source package that wiped files on computers located in Russia and Belarus as part of a protest that has enraged many users and raised concerns about the safety of free and open source software.

The application, node-ipc, adds remote interprocess communication and neural networking capabilities to other open source code libraries. As a dependency, node-ipc is automatically downloaded and incorporated into other libraries, including ones like Vue.js CLI, which has more than 1 million weekly downloads.

“At this point, a very clear abuse and a critical supply chain security incident will occur for any system on which this npm package will be called upon, if that matches a geolocation of either Russia or Belarus,” wrote Liran Tal, a researcher at Snyk, a security company that tracked the changes and published its findings on Wednesday.

“Snyk stands with Ukraine, and we’ve proactively acted to support the Ukrainian people during the ongoing crisis with donations and free service to developers worldwide, as well as taking action to cease business in Russia and Belarus,” Tal wrote. “That said, intentional abuse such as this undermines the global open source community and requires us to flag impacted versions of node-ipc as security vulnerabilities.”

Yeah, kids, don't do this. The good guys have to stay the good guys because it's hard to go back from being a bad guy.

This weekend, I built the Production assets for Weather Now v5, which means that the production app exists. I haven't switched over the domain name yet, for reasons I will explain. But I've created the Production Deploy pipeline in Azure DevOps and it has pushed all of the bits up to the Production workloads.

Everything works, but a couple of features don't work perfectly. Specifically, the Search feature will happily find everything in the database, but right now, the database only has about 31,000 places. Also, I haven't moved any of the archival data over from v4, so the Production app only has data back to yesterday. (The Dev/Test app has data back to last May, so for about the next week it'll have more utility than Production.)

I'm going to kick the tires on the Production app for a week or so before turning off v4. I expect my Azure bill will be about $200 higher than usual this month, but my June bill should be about what my January bill was. Uploading all the archival data and the Gazetteer will cost many, many database units, and I'll keep v4 running for probably half of April, for example.

The Dev/Test version and the Production version have the same bits as of this post. Going forward, Dev/Test will get all the new features probably a week or two ahead of Prod, just like in the real world.

Plus, over the next few months I'll post explanations of how and why I did everything in v5.

I'm pretty excited. Everything from here on is incremental, so every deployment should be very boring.

I just finished upgrading an old, old, old Windows service to .NET 6 and a completely different back end. It took 6.4 hours, soup to nuts, and now the .NET 6 service is happily communicating with Azure and the old .NET Framework 4.6 service is off.

Meanwhile, the Post published a map (using a pretty lazy algorithm) describing county-by-county what sunrise times will look like in January 2024 if daylight saving time becomes permanent. I'd have actually used a curve tool but, hey, the jagged edges look much more "data-driven." (They used the center point of each county.)

Now it's 22:45 (daylight saving time), and I need to empty Cassie and go to bed. But I'm pretty jazzed by how I spent a rainy afternoon on PTO. It was definitely more rewarding than tramping out in the rain to a couple of breweries for the Brews & Choos project, which had been Plan A.

I mentioned yesterday that I purged a lot of utterly useless archival data from Weather Now. It sometimes takes a while for the Azure Portal to update its statistics, so I didn't know until this afternoon exactly how much I dumped: 325 GB.

Version 5 already has a bi-monthly function to move archival data to cool storage after 14 days and purge it after 3 months. I'm going to extend that to purging year-old logs as well. It may only reduce my Azure bill by $20 a month, but hey, that's a couple of beers.
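
The rule itself is easy to state in code. Here's a minimal sketch of the per-item decision a scheduled cleanup job like that might make. It isn't the actual v5 function. The names are hypothetical, "3 months" is approximated as 90 days, and logs are simply kept until they're a year old.

```python
from datetime import timedelta
from enum import Enum

class Action(Enum):
    KEEP = "keep"
    MOVE_TO_COOL = "move to cool storage"
    PURGE = "purge"

# Thresholds from the post; 90 days stands in for "3 months" (an assumption).
COOL_AFTER = timedelta(days=14)
PURGE_ARCHIVE_AFTER = timedelta(days=90)
PURGE_LOGS_AFTER = timedelta(days=365)

def retention_action(age: timedelta, is_log: bool) -> Action:
    """Decide what the cleanup job should do with one item of a given age."""
    if is_log:
        return Action.PURGE if age >= PURGE_LOGS_AFTER else Action.KEEP
    if age >= PURGE_ARCHIVE_AFTER:
        return Action.PURGE
    if age >= COOL_AFTER:
        return Action.MOVE_TO_COOL
    return Action.KEEP

print(retention_action(timedelta(days=20), is_log=False).value)  # move to cool storage
```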

As for Weather Now 5, if all goes well, I'll start downloading weather in the Production environment today, and possibly deploy the app over the weekend. Stay tuned...