For the last couple of days, I've had trouble getting to Microsoft's Azure blog. From my office in downtown Chicago, clicking the link gives me an error message:

The resource you are looking for has been removed, had its name changed, or is temporarily unavailable.

However, going to the same URL from a virtual machine on Azure takes me to the blog. So what's going on here? It took a little detective work, but I think Microsoft has a configuration error one of a set of geographically-distributed Azure web sites, they don't know about it, and there's no way to tell them.

The first step in diagnosing a problem like this is to see if it's local. Is there something about the network I'm on that prevents me from seeing the website? This is unlikely for a few big reasons: first, when a local network blocks or fails to connect to an outside site, usually nothing at all happens. This is how the Great Firewall of China works, because someone trying to get to a "forbidden" address may get there slowly, normally, or not at all—and it just looks like a glitch. Second, though, the root Azure site is completely accessible. Only the Blog directory has an error message. Finally, the error message is coming from the foreign system. Chrome confirms this; there's a HTTP 200 (OK) response with the content I see.

All right, so the Azure Blog is down. But that doesn't make a lot of sense. Thousands of people read the Azure blog every day; if it were down, surely Microsoft would have noticed, right?

So for my next test, I spun up an Azure Virtual Machine (VM) and tried to connect from there. Bing! No problem. There's the blog.

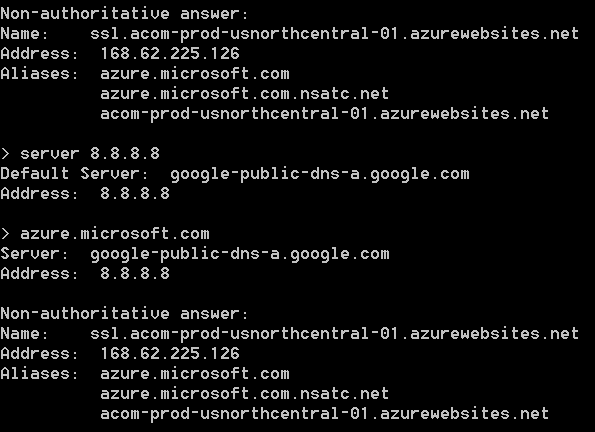

Now we're onto something. So let's take a look at where my local computer thinks it's going, and where the VM thinks it's going. Here's the nslookup result for my local machine, both from my company's DNS server and from Google's 8.8.8.8 server:

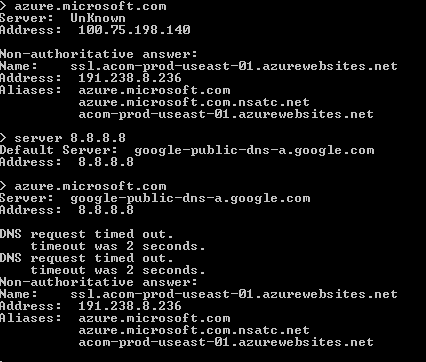

Now here's what the VM sees:

Well, now, that is interesting.

From my local computer, sitting in downtown Chicago, both Google and my company's DNS servers point "azure.microsoft.com" to an Azure web site sitting in the North Central U.S. data center, right here in Chicago. But for the VM, which itself is running in the East U.S. data center in southern Virginia, both Microsoft's and Google's DNS servers point the same domain to an Azure web site also within the East U.S. data center.

It looks like both Microsoft and Google are using geographic load-balancing and some clever routing to return DNS addresses based on where the DNS request comes from. I'd bet if I spun up an Azure VM in the U.S. West data center, both would send me to the Azure blog running out there.

This is what massive load balancing looks like from the outside, by the way. If you've put your systems together correctly, users will go to the nearest servers for your content, and they'll never realize it.

Unfortunately, the North Central U.S. instance of the Microsoft Azure blog is down, has been down for several days, and won't come up again until someone at Microsoft realizes it's down. Also, Microsoft makes it practically impossible to notify them that something is broken. So those of us in Chicago will just have to read about Azure on our Azure VMs until someone in Redmond fixes their broken server. I hope they read my blog.